Upload images (optional)

export tag=7.17.3

images=(

docker.elastic.co/elasticsearch/elasticsearch:${tag}

docker.elastic.co/kibana/kibana:${tag}

docker.elastic.co/beats/filebeat:${tag}

)

# Push to Harbor

export https_proxy="192.168.10.250:7890" # proxy

for image in "${images[@]}"; do skopeo copy "docker://${image}" "docker://registry.sundayhk.com/elastic/${image#*/}"; done
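The `${image#*/}` expansion in the loop above strips everything up to the first `/`, that is, the source registry host. A minimal dry-run sketch of that mapping, where `target_ref` is a hypothetical helper name introduced only for illustration:

```shell
#!/usr/bin/env bash
# Same target prefix as the skopeo loop
registry="registry.sundayhk.com/elastic"

# Hypothetical helper: print the destination reference for a source image.
target_ref() {
  image="$1"
  # ${image#*/} removes the shortest prefix ending in "/", i.e. the registry host
  printf '%s/%s\n' "$registry" "${image#*/}"
}

# Dry run: print the mapping instead of copying anything
for image in \
  docker.elastic.co/elasticsearch/elasticsearch:7.17.3 \
  docker.elastic.co/kibana/kibana:7.17.3 \
  docker.elastic.co/beats/filebeat:7.17.3; do
  echo "${image} -> $(target_ref "${image}")"
done
```

Printing the mapping first makes it easy to confirm the repository paths before any image is actually pushed.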

Deploy Elasticsearch

Add the Helm repo and set the storageClass

helm repo add elastic https://helm.elastic.co

helm pull elastic/elasticsearch # --version 7.17.3

elasticsearch-7.17.3.tgz

tar xf elasticsearch-7.17.3.tgz

cd elasticsearch

vim values.yaml

volumeClaimTemplate:
  storageClassName: "nfs-client"
  accessModes: [ "ReadWriteOnce" ]
  resources:
    requests:
      storage: 30Gi

Install ES

helm install elasticsearch . -n logging --create-namespace
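Instead of editing the chart's values.yaml in place, the same storage settings could be kept in a separate override file and passed with `-f`. A minimal sketch, where `write_es_values` and the temporary file are hypothetical names used only for this example:

```shell
#!/usr/bin/env bash
# Hypothetical helper: write an override file carrying the storage settings shown above.
write_es_values() {
  cat > "$1" <<'EOF'
volumeClaimTemplate:
  storageClassName: "nfs-client"
  accessModes: [ "ReadWriteOnce" ]
  resources:
    requests:
      storage: 30Gi
EOF
}

values_file="$(mktemp)"
write_es_values "$values_file"
cat "$values_file"

# The install would then look like this (not executed here):
#   helm install elasticsearch . -n logging --create-namespace -f "$values_file"
```

Keeping overrides in their own file leaves the unpacked chart untouched, which can make later chart upgrades easier to diff.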

Check status

[root@master1 elasticsearch]# kubectl get sts -n logging -owide

NAME READY AGE CONTAINERS IMAGES

elasticsearch-master 3/3 3m31s elasticsearch docker.elastic.co/elasticsearch/elasticsearch:7.17.3

[root@master1 elasticsearch]# kubectl get pod -n logging

NAME READY STATUS RESTARTS AGE

elasticsearch-master-0 1/1 Running 0 5m19s

elasticsearch-master-1 1/1 Running 0 5m19s

elasticsearch-master-2 1/1 Running 0 5m19s

[root@master1 elasticsearch]# kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

nfs-client (default) cluster.local/nfs-subdir-external-provisioner Delete Immediate true 7h2m

[root@master1 elasticsearch]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

elasticsearch-master-elasticsearch-master-0 Bound pvc-b34cbcbf-fd17-4b0d-b425-6916b9e0539b 30Gi RWO nfs-client 9m23s

elasticsearch-master-elasticsearch-master-1 Bound pvc-d25e441f-28c2-4b51-8ae8-3b923c3bbb43 30Gi RWO nfs-client 9m23s

elasticsearch-master-elasticsearch-master-2 Bound pvc-bdd93171-d767-4cc2-a272-f1a6f26b99d0 30Gi RWO nfs-client 9m23

[root@master1 ~]# kubectl get svc -nlogging

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

elasticsearch-master ClusterIP 10.230.47.55 <none> 9200/TCP,9300/TCP 5m40

elasticsearch-master-headless ClusterIP None <none> 9200/TCP,9300/TCP 5m40

[root@master1 ~]# curl 10.230.47.55:9200

{

"name" : "elasticsearch-master-0",

"cluster_name" : "elasticsearch",

"cluster_uuid" : "kt5yGerNRvqIsCwQ5ux4Cg",

"version" : {

"number" : "7.17.3",

"build_flavor" : "default",

"build_type" : "docker",

"build_hash" : "5ad023604c8d7416c9eb6c0eadb62b14e766caff",

"build_date" : "2022-04-19T08:11:19.070913226Z",

"build_snapshot" : false,

"lucene_version" : "8.11.1",

"minimum_wire_compatibility_version" : "6.8.0",

"minimum_index_compatibility_version" : "6.0.0-beta1"

},

"tagline" : "You Know, for Search"

}

curl 10.230.47.55:9200/_cat/nodes

curl 10.230.47.55:9200/_cat/health

curl 10.230.47.55:9200/_cat/master

curl 10.230.47.55:9200/_cat/indices
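For scripted checks, the cluster status can be pulled out of the `_cat/health` output. The sketch below assumes the default column order, in which the status is the fourth field; `health_status` is a hypothetical helper and the sample line is illustrative, not taken from this cluster:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the status column (4th field) of _cat/health output.
health_status() {
  awk '{ print $4 }'
}

# Illustrative line in the default _cat/health column order
sample='1669363200 08:00:00 elasticsearch green 3 3 2 1 0 0 0 0 - 100.0%'
echo "$sample" | health_status

# Against the live cluster (not executed here):
#   curl -s 10.230.47.55:9200/_cat/health | health_status
# Or ask Elasticsearch for just that column:
#   curl -s '10.230.47.55:9200/_cat/health?h=status'
```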

Deploy Kibana

helm pull elastic/kibana

tar xf kibana-7.17.3.tgz

cd kibana

Override Kibana's default configuration file /usr/share/kibana/config/kibana.yml

vim values.yaml

kibanaConfig:
  kibana.yml: |
    server.port: 5601
    server.host: "0.0.0.0"
    elasticsearch.hosts: ["http://elasticsearch-master:9200"]

Enable ingress

ingress:
  enabled: true
  className: "nginx"
  pathtype: ImplementationSpecific
  annotations: {}
  # kubernetes.io/ingress.class: nginx
  # kubernetes.io/tls-acme: "true"
  hosts:
    - host: kibana-kibana
      paths:
        - path: /

helm install kibana ./ -n logging --create-namespace

[root@master1 filebeat]# kubectl get pods -l app=kibana -nlogging

NAME READY STATUS RESTARTS AGE

kibana-kibana-f6bfc696-x56sw 1/1 Running 0 9m5s

Deploy Filebeat

helm install filebeat elastic/filebeat -nlogging --create-namespace

[root@master1 ~]# kubectl get pod -nlogging -l app=filebeat-filebeat

NAME READY STATUS RESTARTS AGE

filebeat-filebeat-78sqk 1/1 Running 0 11m

filebeat-filebeat-7hk9l 1/1 Running 0 11m

filebeat-filebeat-hlrcw 1/1 Running 0 11m

filebeat-filebeat-ql8m8 1/1 Running 0 11m

filebeat-filebeat-xgm76 1/1 Running 0 11m

filebeat-filebeat-xmdcm 1/1 Running 0 11m

Kibana Dashboard

Resolve the ingress host kibana-kibana

Three ingress-nginx controllers are running here, so any of the IPs 192.168.10.221-223 will work

[root@master1 ~]# kubectl get pod -n ingress-nginx -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

ingress-nginx-controller-d6qg8 1/1 Running 0 10h 192.168.10.223 master3 <none> <none>

ingress-nginx-controller-h9gt7 1/1 Running 0 10h 192.168.10.221 master1 <none> <none>

ingress-nginx-controller-xpq2q 1/1 Running 0 10h 192.168.10.222 master2 <none> <none>

Add an entry to the local hosts file

192.168.10.221 kibana-kibana
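The hosts entry has to match the ingress host `kibana-kibana` exactly. A minimal sketch of adding it idempotently follows; `add_hosts_entry` is a hypothetical helper, and the demo writes to a temporary file rather than the real /etc/hosts:

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "IP HOST" to a hosts file unless HOST is already listed.
# $1 = hosts file, $2 = IP address, $3 = host name
add_hosts_entry() {
  if grep -qE "[[:space:]]$3([[:space:]]|\$)" "$1" 2>/dev/null; then
    return 0
  fi
  printf '%s %s\n' "$2" "$3" >> "$1"
}

# Demo against a temporary file; on a real machine the target would be /etc/hosts (needs root)
hosts_file="$(mktemp)"
add_hosts_entry "$hosts_file" 192.168.10.221 kibana-kibana
add_hosts_entry "$hosts_file" 192.168.10.221 kibana-kibana   # second call is a no-op
cat "$hosts_file"

# To test the ingress without touching any hosts file (not executed here):
#   curl --resolve kibana-kibana:80:192.168.10.221 http://kibana-kibana/
```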

Open http://kibana-kibana in a browser

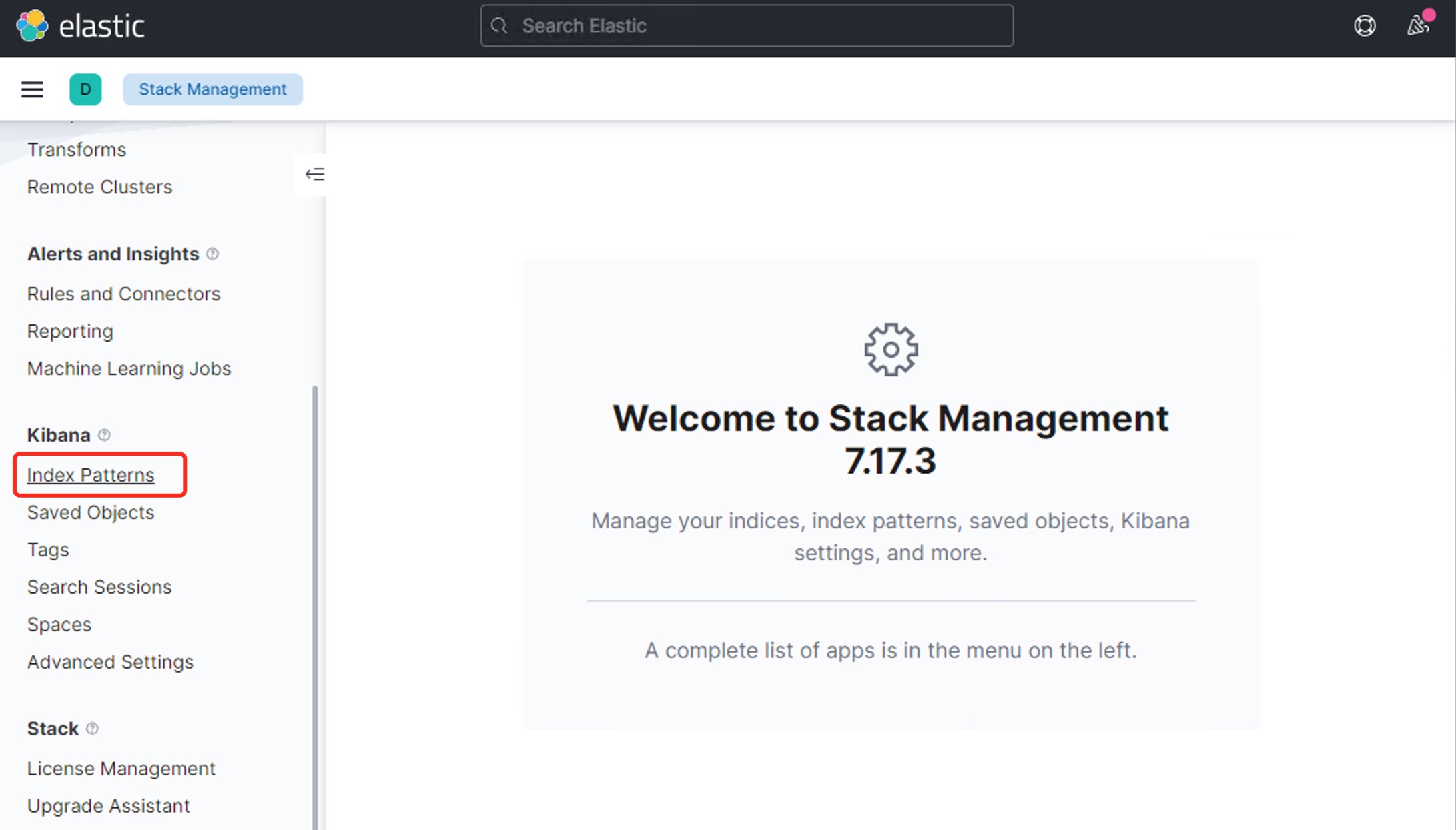

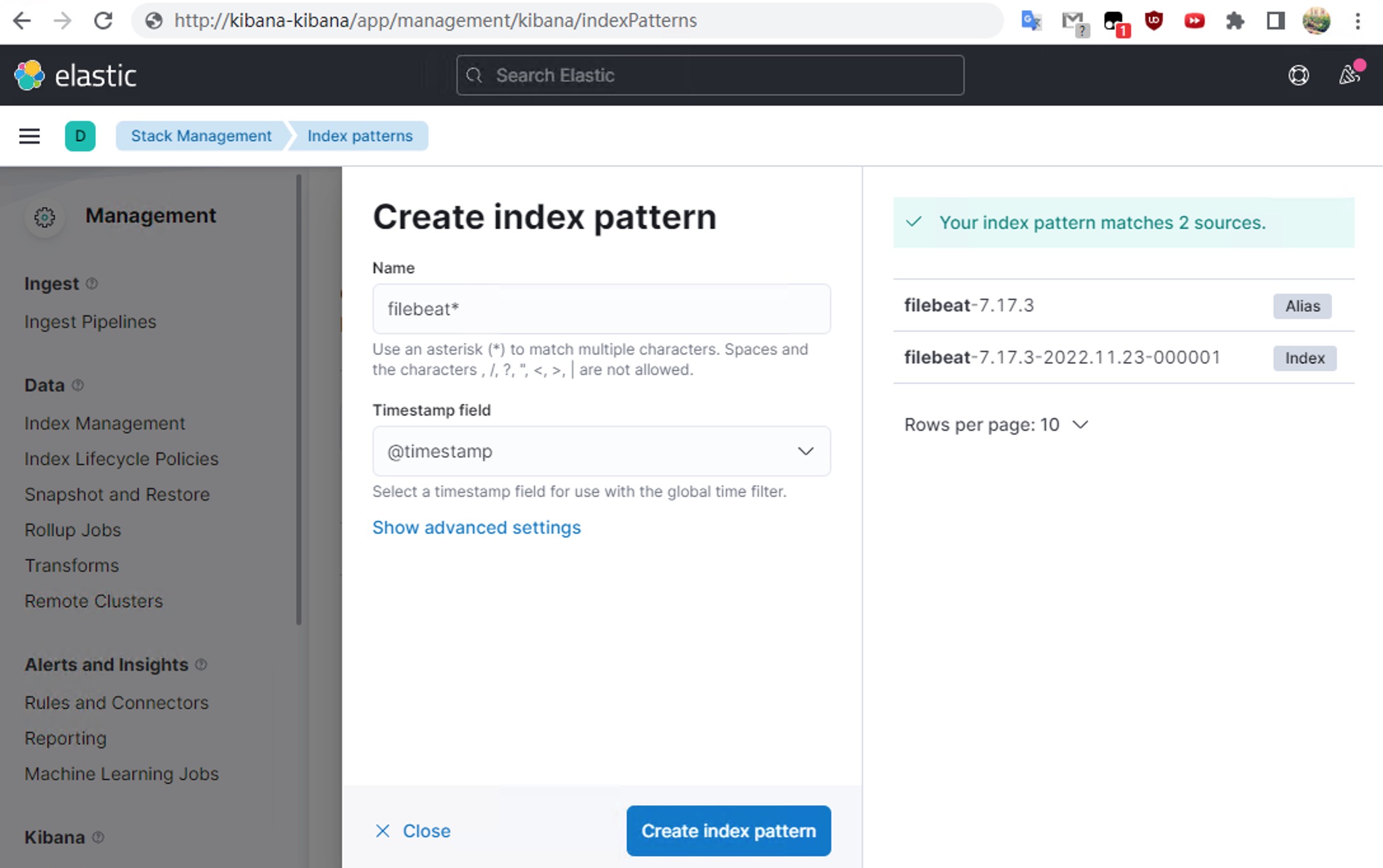

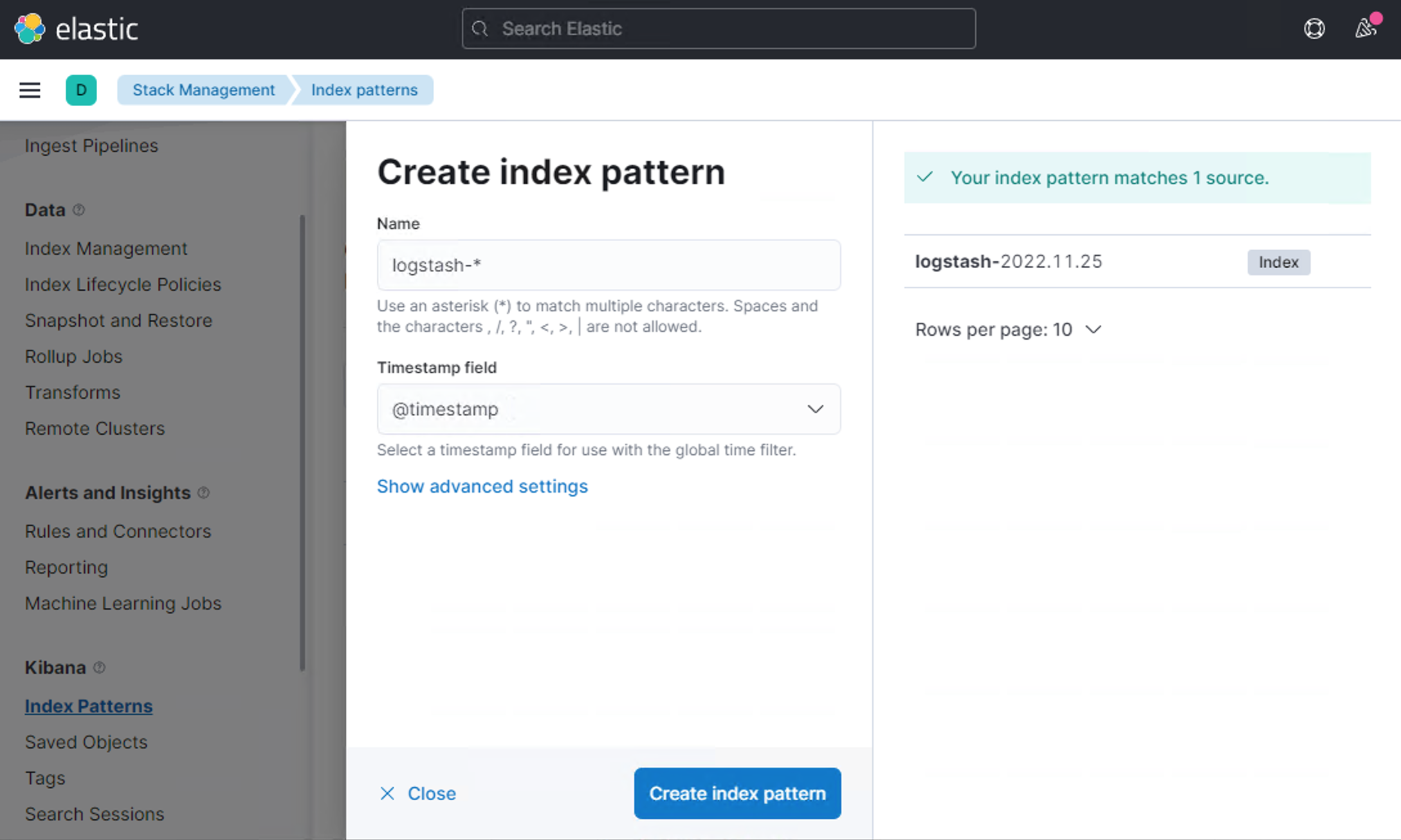

Create index pattern

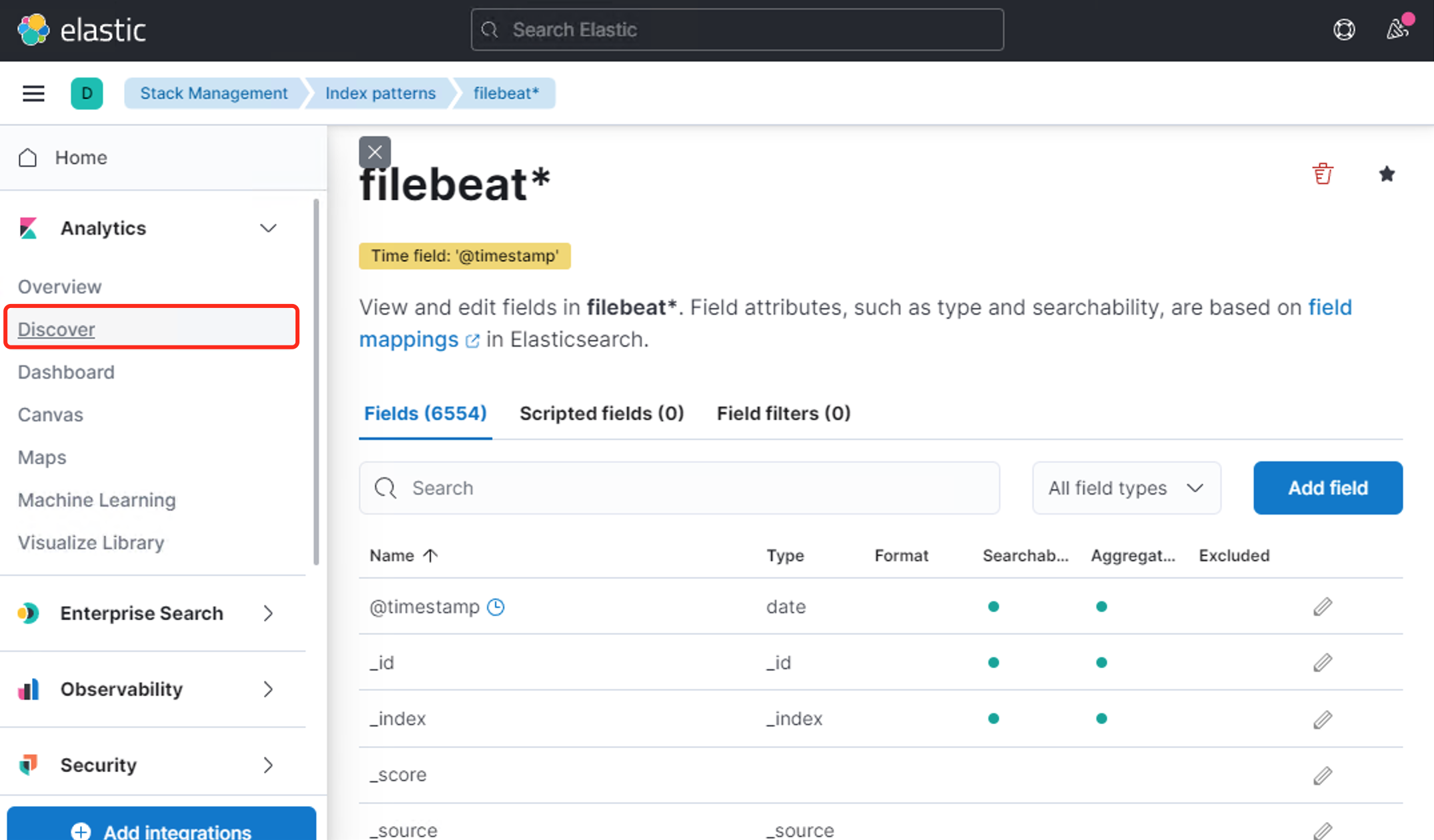

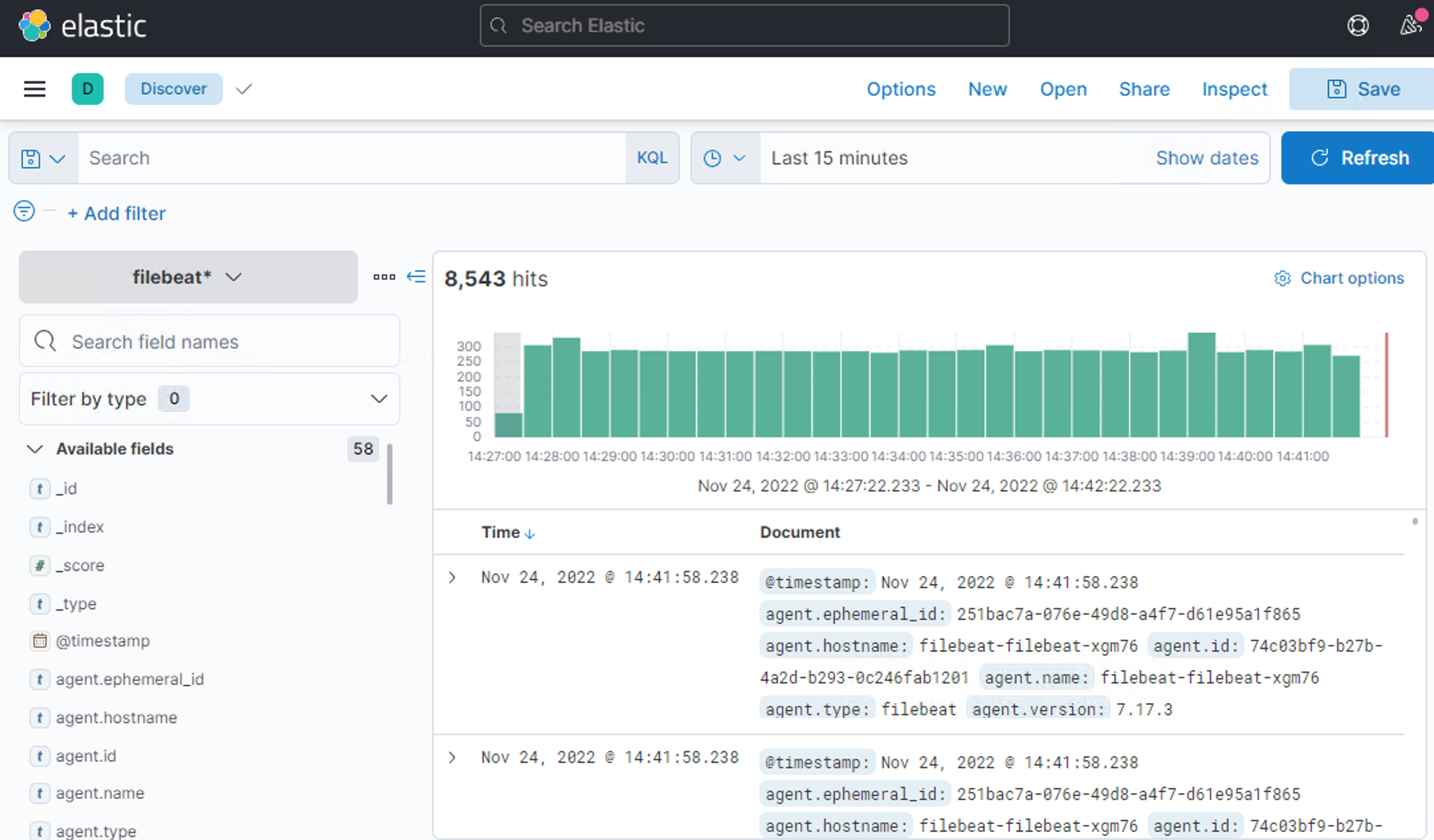

View in Discover

Fluent-bit

Uninstall Filebeat and use Fluent Bit, a lighter-weight log collector, instead

helm uninstall filebeat -n logging

For Kubernetes v1.21 and below

kubectl create namespace logging

kubectl create -f https://raw.githubusercontent.com/fluent/fluent-bit-kubernetes-logging/master/fluent-bit-service-account.yaml

kubectl create -f https://raw.githubusercontent.com/fluent/fluent-bit-kubernetes-logging/master/fluent-bit-role.yaml

kubectl create -f https://raw.githubusercontent.com/fluent/fluent-bit-kubernetes-logging/master/fluent-bit-role-binding.yaml

For Kubernetes v1.22 and above

kubectl create namespace logging

kubectl create -f https://raw.githubusercontent.com/fluent/fluent-bit-kubernetes-logging/master/fluent-bit-service-account.yaml

kubectl create -f https://raw.githubusercontent.com/fluent/fluent-bit-kubernetes-logging/master/fluent-bit-role-1.22.yaml

kubectl create -f https://raw.githubusercontent.com/fluent/fluent-bit-kubernetes-logging/master/fluent-bit-role-binding-1.22.yaml
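The two variants differ only in the role and role-binding files. The choice could be scripted as below, assuming the `-1.22` manifests apply to v1.22 and newer; `rbac_manifests` is a hypothetical helper that only prints the URLs listed above:

```shell
#!/usr/bin/env bash
base="https://raw.githubusercontent.com/fluent/fluent-bit-kubernetes-logging/master"

# Hypothetical helper: print the RBAC manifest URLs for a Kubernetes minor version (e.g. 21 or 24).
rbac_manifests() {
  suffix=""
  if [ "$1" -ge 22 ]; then
    suffix="-1.22"
  fi
  printf '%s\n' \
    "${base}/fluent-bit-service-account.yaml" \
    "${base}/fluent-bit-role${suffix}.yaml" \
    "${base}/fluent-bit-role-binding${suffix}.yaml"
}

rbac_manifests 21
rbac_manifests 24

# To apply them (not executed here):
#   rbac_manifests 24 | xargs -n1 kubectl create -f
```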

Fluent Bit to Elasticsearch

kubectl create -f https://raw.githubusercontent.com/fluent/fluent-bit-kubernetes-logging/master/output/elasticsearch/fluent-bit-configmap.yaml

# Remember to change FLUENT_ELASTICSEARCH_HOST in fluent-bit-ds.yaml (here it should point at the elasticsearch-master service)

kubectl create -f https://raw.githubusercontent.com/fluent/fluent-bit-kubernetes-logging/master/output/elasticsearch/fluent-bit-ds.yaml
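Because the manifest is created straight from the URL, changing `FLUENT_ELASTICSEARCH_HOST` means downloading it first. The sketch below assumes the env entry is laid out as a `name:` line followed directly by its `value:` line; `set_es_host` is a hypothetical helper and the sample fragment is illustrative:

```shell
#!/usr/bin/env bash
# Hypothetical helper: rewrite the value that follows "name: FLUENT_ELASTICSEARCH_HOST".
# Reads a manifest on stdin and writes the modified manifest to stdout.
set_es_host() {
  awk -v host="$1" '
    hit && /value:/ { sub(/value:.*/, "value: \"" host "\""); hit = 0 }
    /name: FLUENT_ELASTICSEARCH_HOST/ { hit = 1 }
    { print }
  '
}

# Illustrative fragment (assumed layout, not copied from the upstream file)
printf '%s\n' \
  '        - name: FLUENT_ELASTICSEARCH_HOST' \
  '          value: "elasticsearch"' \
  '        - name: FLUENT_ELASTICSEARCH_PORT' \
  '          value: "9200"' | set_es_host elasticsearch-master

# With the real manifest (not executed here):
#   curl -sLO https://raw.githubusercontent.com/fluent/fluent-bit-kubernetes-logging/master/output/elasticsearch/fluent-bit-ds.yaml
#   set_es_host elasticsearch-master < fluent-bit-ds.yaml | kubectl create -f -
```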

The templates provided by Fluent Bit assume by default that logs are in Docker's log format. This cluster uses containerd, so the Parser in the ConfigMap has to be changed to cri

vim fluent-bit-configmap.yaml

  input-kubernetes.conf: |
    [INPUT]
        Name              tail
        Tag               kube.*
        Path              /var/log/containers/*.log
        # changed from docker to cri
        Parser            cri
        DB                /var/log/flb_kube.db
        # increased
        Mem_Buf_Limit     100MB
        Skip_Long_Lines   On
        Refresh_Interval  10

  parsers.conf: |
    [PARSER]
        # http://rubular.com/r/tjUt3Awgg4
        Name        cri
        Format      regex
        Regex       ^(?<time>[^ ]+) (?<stream>stdout|stderr) (?<logtag>[^ ]*) (?<message>.*)$
        Time_Key    time
        Time_Format %Y-%m-%dT%H:%M:%S.%L%z
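To see what the cri regex extracts, it can be tried against a sample line outside Fluent Bit. The sketch below uses `sed -E` with positional groups in place of the named groups; `parse_cri_line` is a hypothetical helper and the log line is made up for illustration:

```shell
#!/usr/bin/env bash
# Hypothetical helper: split one CRI-formatted log line into its four fields.
# Prints nothing when the line does not match (for example a Docker JSON log line).
parse_cri_line() {
  printf '%s\n' "$1" |
    sed -nE 's/^([^ ]+) (stdout|stderr) ([^ ]*) (.*)$/time=\1 stream=\2 logtag=\3 message=\4/p'
}

# Illustrative containerd log line (not taken from this cluster)
parse_cri_line '2022-11-25T08:00:00.123456789+08:00 stdout F hello from my-app'
```

A line in Docker's JSON format contains no such space-separated prefix, so it would not match, which is why the parser has to be switched when the runtime is containerd.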

Fluent Bit to Kafka

kubectl create -f https://raw.githubusercontent.com/fluent/fluent-bit-kubernetes-logging/master/output/kafka/fluent-bit-configmap.yaml

kubectl create -f https://raw.githubusercontent.com/fluent/fluent-bit-kubernetes-logging/master/output/kafka/fluent-bit-ds.yaml

kubectl create -f https://raw.githubusercontent.com/fluent/fluent-bit-kubernetes-logging/master/output/elasticsearch/fluent-bit-ds.yaml

[root@master1 fluent-bit]# kubectl get pod -n logging -l k8s-app=fluent-bit-logging

NAME READY STATUS RESTARTS AGE

fluent-bit-29v82 1/1 Running 0 7m15s

fluent-bit-6dw9h 1/1 Running 0 7m15s

fluent-bit-6jrbm 1/1 Running 0 7m15s

fluent-bit-b8xng 1/1 Running 0 7m15s

fluent-bit-r5r4c 1/1 Running 0 7m15s

fluent-bit-v7vbv 1/1 Running 0 7m15s

curl 10.230.47.55:9200/_cat/indices

...

green open logstash-2022.11.25 4Ei45nXXS9e6h6iPU-UY6w 1 1 649200 0 178.4mb 89.2mb
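To confirm that Fluent Bit is writing, the index name and document count can be picked out of the `_cat/indices` output. A minimal sketch, assuming the default column order shown in the line above; `index_docs` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print "index docs.count" for logstash-* indices
# (3rd and 7th fields in the default _cat/indices column order).
index_docs() {
  awk '$3 ~ /^logstash-/ { print $3, $7 }'
}

# The line from the output above
echo 'green open logstash-2022.11.25 4Ei45nXXS9e6h6iPU-UY6w 1 1 649200 0 178.4mb 89.2mb' | index_docs

# Against the live cluster (not executed here):
#   curl -s 10.230.47.55:9200/_cat/indices | index_docs
# Or request only those columns:
#   curl -s '10.230.47.55:9200/_cat/indices/logstash-*?h=index,docs.count'
```

Running it twice a few seconds apart and seeing the count grow is a quick sign that logs are flowing.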

Set it as the default index pattern; otherwise the source has to be selected in Discover each time

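Both Fluentd and Fluent Bit officially recommend deploying as a DaemonSet, and both manage the collection configuration through a ConfigMap that the container mounts and reads. The difference is that the templates Fluentd provides are more integrated: there is a purpose-built image for each output scenario, so in most cases users only need to inject a few environment variables to start collecting.

Both also support collecting standard output in Docker and CRI formats, but the templates have to be edited by hand to switch between them.

Although the Fluentd and Fluent Bit projects provide some Kubernetes deployment manifests, the overall level of automation is low and the procedure is tedious. This gap gave rise to various Operators, the best known being Fluent Operator and Logging Operator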
