Base environment configuration

| IP address | Hostname | Services | Specs |
|---|---|---|---|
| 192.168.1.171 | k8s-01 | k8s-master, containerd, keepalived, nginx | 2 CPU, 4 GB RAM |
| 192.168.1.172 | k8s-02 | k8s-master, containerd, keepalived, nginx | 2 CPU, 4 GB RAM |
| 192.168.1.173 | k8s-03 | k8s-master, containerd, keepalived, nginx | 2 CPU, 4 GB RAM |
| 192.168.1.174 | k8s-04 | k8s-node, containerd | 2 CPU, 2 GB RAM |
| 192.168.1.175 | k8s-05 | k8s-node, containerd | 2 CPU, 2 GB RAM |

- VIP: 192.168.1.170, domain name: apiserver.sundayhk.com

- kube-apiserver: three nodes

- kube-scheduler: three nodes

- kube-controller-manager: three nodes

- etcd: three nodes

Versions

| Component | Version |
|---|---|
| Kernel | 5.19.3-1.el7.elrepo.x86_64 |
| containerd | v1.6.4 |
| ctr | 1.6.4 |
| k8s | 1.23.5 |

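Environment initialization

Set hostnames and configure passwordless SSH

hostnamectl set-hostname k8s-01 # run on every machine, using that machine's own hostname

bash # start a new shell so the prompt picks up the new hostname

# configure /etc/hosts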

cat >> /etc/hosts <<EOF

192.168.1.171 k8s-01

192.168.1.172 k8s-02

192.168.1.173 k8s-03

192.168.1.174 k8s-04

192.168.1.175 k8s-05

EOF

# Use k8s-01 as the distribution host (the following only needs to run on k8s-01)

wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

curl -o /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

yum install -y expect

# Distribute the public key. The root password is assumed to be 123456 here; change it to match your environment

ssh-keygen -t rsa -P "" -f /root/.ssh/id_rsa

export mypass=123456

name=(k8s-01 k8s-02 k8s-03 k8s-04 k8s-05)

for i in ${name[@]};do expect -c "

spawn ssh-copy-id -i /root/.ssh/id_rsa.pub root@$i

expect {

\"*yes/no*\" {send \"yes\r\"; exp_continue}

\"*password*\" {send \"$mypass\r\"; exp_continue}

\"*Password*\" {send \"$mypass\r\";}

}";done

Disable SELinux, the firewall (iptables rules), and swap on all master and worker nodes

systemctl stop firewalld

systemctl disable firewalld

iptables -F && iptables -X && iptables -F -t nat && iptables -X -t nat

iptables -P FORWARD ACCEPT

swapoff -a

sed -ri '/ swap / s/^(.*)$/#\1/' /etc/fstab

setenforce 0

sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config
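After commenting out the swap entry it can be worth confirming that nothing swap-related is still active in the file. The following is a minimal sketch; `has_active_swap` is a hypothetical helper name, not part of any tool, and it assumes the usual whitespace-separated fstab layout where the third field is the filesystem type.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of any package): exits 0 if the given
# fstab-style file still contains an uncommented entry of type "swap".
has_active_swap() {
    awk '$1 !~ /^#/ && $3 == "swap" { found = 1 } END { exit found ? 0 : 1 }' "$1"
}
```

On a node this could be called as `has_active_swap /etc/fstab && echo "swap entry still enabled"`, alongside `swapon --show` to check the running state.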

Configure the yum repositories on all master and worker nodes

curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo

wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

yum clean all

yum makecache

On freshly installed servers, install the following packages to resolve dependency issues

yum -y install gcc gcc-c++ make autoconf libtool-ltdl-devel gd-devel freetype-devel libxml2-devel libjpeg-devel libpng-devel openssh-clients openssl-devel curl-devel bison patch libmcrypt-devel libmhash-devel ncurses-devel binutils compat-libstdc++-33 elfutils-libelf elfutils-libelf-devel glibc glibc-common glibc-devel libgcj libtiff pam-devel libicu libicu-devel gettext-devel libaio-devel libaio libgcc libstdc++ libstdc++-devel unixODBC unixODBC-devel numactl-devel glibc-headers sudo bzip2 mlocate flex lrzsz sysstat lsof setuptool system-config-network-tui system-config-firewall-tui ntsysv ntp pv lz4 dos2unix unix2dos rsync dstat iotop innotop mytop telnet iftop expect cmake nc gnuplot screen xorg-x11-utils xorg-x11-xinit rdate bc expat-devel compat-expat1 tcpdump sysstat man nmap curl lrzsz elinks finger bind-utils traceroute mtr ntpdate zip unzip vim wget net-tools

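Load the br_netfilter module

The net.bridge.bridge-nf-call-* kernel parameters set later only exist once the br_netfilter module is loaded, so load it first

# on every node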

modprobe br_netfilter

modprobe ip_conntrack

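The commands above only take effect for the current boot; the modules are gone after a reboot. To load them automatically at boot, create the module scripts under /etc/sysconfig/modules/ and the /etc/rc.sysinit file, and make the scripts executable.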

echo "modprobe br_netfilter" >/etc/sysconfig/modules/br_netfilter.modules

echo "modprobe ip_conntrack" >/etc/sysconfig/modules/ip_conntrack.modules

chmod 755 /etc/sysconfig/modules/br_netfilter.modules

chmod 755 /etc/sysconfig/modules/ip_conntrack.modules

cat >>/etc/rc.sysinit<<'EOF'

#!/bin/bash

for file in /etc/sysconfig/modules/*.modules ; do

[ -x $file ] && $file

done

EOF

Tune kernel parameters

cat > kubernetes.conf <<EOF

net.bridge.bridge-nf-call-iptables=1

net.bridge.bridge-nf-call-ip6tables=1

net.ipv4.ip_forward=1

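# Avoid using swap; it is only used as a last resort before an OOM condition

vm.swappiness=0

# Do not check whether enough physical memory is available (always allow overcommit)

vm.overcommit_memory=1

# Do not panic on OOM; let the OOM killer handle it

vm.panic_on_oom=0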

fs.inotify.max_user_instances=8192

fs.inotify.max_user_watches=1048576

fs.file-max=52706963

fs.nr_open=52706963

net.ipv6.conf.all.disable_ipv6=1

net.netfilter.nf_conntrack_max=2310720

EOF

cp kubernetes.conf /etc/sysctl.d/kubernetes.conf

sysctl -p /etc/sysctl.d/kubernetes.conf

# Distribute to all other master and worker nodes

for i in k8s-02 k8s-03 k8s-04 k8s-05; do

scp kubernetes.conf root@$i:/etc/sysctl.d/

ssh root@$i sysctl -p /etc/sysctl.d/kubernetes.conf

ssh root@$i "echo 1 > /proc/sys/net/ipv4/ip_forward"

done
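To confirm that the values in kubernetes.conf really took effect, the file can be compared with what the kernel reports under /proc/sys. This is only a sketch: `sysctl_key_to_path` and `verify_sysctl_file` are hypothetical helper names, and the optional second argument (an alternative root directory) exists purely to make the check testable without touching a real system.

```shell
#!/usr/bin/env bash
# Hypothetical helpers, not part of procps.

# net.ipv4.ip_forward -> /proc/sys/net/ipv4/ip_forward
sysctl_key_to_path() {
    local root="${2:-/proc/sys}"
    printf '%s/%s\n' "$root" "$(printf '%s' "$1" | tr '.' '/')"
}

# Compare every key=value line of a sysctl file with the live value.
# Prints one MISMATCH line per difference and returns non-zero if any differ.
verify_sysctl_file() {
    local conf="$1" root="${2:-/proc/sys}" key want path have rc=0
    while IFS='=' read -r key want; do
        key=$(printf '%s' "$key" | tr -d '[:space:]')
        want=$(printf '%s' "$want" | tr -d '[:space:]')
        path=$(sysctl_key_to_path "$key" "$root")
        have=$(cat "$path" 2>/dev/null | tr -d '[:space:]')
        if [ "$have" != "$want" ]; then
            echo "MISMATCH $key: want=$want have=${have:-<unreadable>}"
            rc=1
        fi
    done <<EOF
$(grep -Ev '^[[:space:]]*([#;]|$)' "$conf")
EOF
    return $rc
}
```

On each node, `verify_sysctl_file /etc/sysctl.d/kubernetes.conf` would then list any parameter that did not apply, for example because br_netfilter or nf_conntrack was not loaded yet.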

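bridge-nf lets netfilter filter IPv4/ARP/IPv6 packets that cross a Linux bridge. For example, with net.bridge.bridge-nf-call-iptables=1, packets forwarded by a layer-2 bridge are also filtered by the iptables FORWARD rules. Commonly used options:

net.bridge.bridge-nf-call-arptables: whether bridged ARP packets are filtered in the arptables FORWARD chain

net.bridge.bridge-nf-call-ip6tables: whether bridged IPv6 packets are filtered in the ip6tables chains

net.bridge.bridge-nf-call-iptables: whether bridged IPv4 packets are filtered in the iptables chains

net.bridge.bridge-nf-filter-vlan-tagged: whether VLAN-tagged packets are filtered in iptables/arptables

Install ipvs

On all master and worker nodes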

cat > /etc/sysconfig/modules/ipvs.modules <<EOF

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack

EOF

chmod 755 /etc/sysconfig/modules/ipvs.modules

bash /etc/sysconfig/modules/ipvs.modules

lsmod | grep -e ip_vs -e nf_conntrack

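# check that the required kernel modules are loaded

Install ipset on all master and worker nodes

iptables is the core technology for network isolation on Linux servers. When handling a packet the kernel evaluates the iptables rules one by one, so performance drops as the number of rules grows. ipset allows the 5-tuples used in rules (protocol, source address, source port, destination address, destination port) to be merged into a limited number of sets, which greatly reduces the number of iptables rules and improves efficiency. Reported test results put the ipset approach at roughly 100 times the efficiency of plain iptables rules.

To make ipvs easier to manage, ipvsadm is installed as well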
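Reading the `lsmod | grep` output by eye makes it easy to miss one module. As a sketch, the check can be turned into a list of what is missing; `missing_modules` is a hypothetical helper that reads lsmod-style text on stdin and prints every requested module that is absent.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads lsmod-style output on stdin and prints each
# module named in the arguments that is NOT present in the first column.
missing_modules() {
    local loaded m
    loaded=$(awk '{ print $1 }')
    for m in "$@"; do
        printf '%s\n' "$loaded" | grep -qxF "$m" || echo "$m"
    done
}
```

A possible invocation on a node is `lsmod | missing_modules ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack br_netfilter`; empty output means everything is loaded.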

yum install ipvsadm ipset -y

Set the system time zone on all master and worker nodes

timedatectl set-timezone Asia/Shanghai

# keep the hardware clock in UTC

timedatectl set-local-rtc 0

# restart services that depend on the system time

systemctl restart rsyslog

systemctl restart crond

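Update and upgrade the kernel

Upgrade the kernel (optional)

rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org

rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-3.el7.elrepo.noarch.rpm

# installs the latest mainline kernel by default

yum --enablerepo=elrepo-kernel install kernel-ml

# change the boot order so the new kernel is the default

grub2-set-default 0 && grub2-mkconfig -o /etc/grub2.cfg

# confirm that the default kernel points to the one just installed

grubby --default-kernel

# the output should show the upgraded kernel

reboot

# the reboot can be postponed until all initialization steps are finished

Update packages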

yum update -y

Containerd installation

Install on all master and worker nodes

# remove docker

sudo yum remove docker docker-client docker-client-latest \

docker-common docker-latest docker-latest-logrotate \

docker-logrotate docker-engine

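Before installing containerd, libseccomp has to be upgraded

The libseccomp package that yum provides on CentOS 7 is version 2.3, which does not satisfy recent containerd releases; version 2.4 or newer is required

# check the installed version and remove it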

rpm -qa | grep libseccomp

rpm -e libseccomp-devel-2.3.1-4.el7.x86_64 --nodeps

rpm -e libseccomp-2.3.1-4.el7.x86_64 --nodeps

# download and install a version newer than 2.4

wget http://rpmfind.net/linux/centos/8-stream/BaseOS/x86_64/os/Packages/libseccomp-2.5.1-1.el8.x86_64.rpm

rpm -ivh libseccomp-2.5.1-1.el8.x86_64.rpm
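Whether the upgrade is needed at all, and whether it succeeded, comes down to a version comparison against 2.4. A small sketch of that comparison follows; `version_ge` is a hypothetical helper and relies on `sort -V` (GNU coreutils), which is an assumption about the host.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeeds if version $1 >= version $2.
# Uses GNU sort's version ordering (-V): if the smaller of the two
# values is $2, then $1 is at least $2.
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}
```

On a node it might be used as `version_ge "$(rpm -q --qf '%{VERSION}' libseccomp)" 2.4 || echo "libseccomp too old"`.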

https://kubernetes.io/zh/docs/concepts/architecture/cri/ https://i4t.com/5435.html https://containerd.io/downloads/

- containerd-1.6.4-linux-amd64.tar.gz: contains containerd only

- cri-containerd-cni-1.6.4-linux-amd64.tar.gz: contains containerd plus the CRI tooling, runc, and related tools

Download, extract, and install

wget https://github.com/containerd/containerd/releases/download/v1.6.4/cri-containerd-cni-1.6.4-linux-amd64.tar.gz

tar zxvf cri-containerd-cni-1.6.4-linux-amd64.tar.gz -C /

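The archive contains prebuilt binaries; extracting it into / places them in their target directories, so they can be used as soon as those directories are on the PATH.

Configure containerd

mkdir /etc/containerd -p

containerd config default > /etc/containerd/config.toml

# The default configuration file is /etc/containerd/config.toml

# The path can be changed with --config/-c when starting the daemon

# Replace the default pause image registry

# Use systemd as the container cgroup driver

# Switch the snapshotter to native; in this environment that resolved the startup error "unknown service runtime.v1alpha2.RuntimeService"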

sed -i 's/k8s.gcr.io/registry.aliyuncs.com\/google_containers/' /etc/containerd/config.toml

sed -i 's/SystemdCgroup \= false/SystemdCgroup \= true/' /etc/containerd/config.toml

sed -i 's/snapshotter = "overlayfs"/snapshotter = "native"/' /etc/containerd/config.toml

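The cri-containerd-cni archive also ships a containerd systemd unit, which the extraction step already put in place, so the service can be enabled and started directly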

systemctl enable containerd --now

ctr version

containerd --version

apiserver high availability (can be skipped for a single master)

Run on all master nodes

Approach: nginx + keepalived, VIP: 192.168.1.111

# append to /etc/hosts on the master nodes

cat >>/etc/hosts<< EOF

192.168.1.171 k8s-master-01

192.168.1.172 k8s-master-02

192.168.1.173 k8s-master-03

192.168.1.111 apiserver.sundayhk.com

EOF

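Install and configure nginx

yum install -y nginx

The yum package above is the quick option; to make it easier to add modules later, this guide builds nginx from source instead

# install build dependencies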

yum install pcre pcre-devel openssl openssl-devel gcc gcc-c++ automake autoconf libtool make wget vim lrzsz -y

wget https://nginx.org/download/nginx-1.20.2.tar.gz

tar xf nginx-1.20.2.tar.gz

cd nginx-1.20.2/

useradd nginx -s /sbin/nologin -M

./configure --prefix=/opt/nginx/ --with-pcre --with-http_ssl_module --with-http_stub_status_module --with-stream --with-http_gzip_static_module

make && make install

# create the systemd unit

cat >/usr/lib/systemd/system/nginx.service<<EOF

[Unit]

Description=The nginx HTTP and reverse proxy server

After=network.target sshd-keygen.service

[Service]

Type=forking

EnvironmentFile=/etc/sysconfig/sshd

ExecStartPre=/opt/nginx/sbin/nginx -t -c /opt/nginx/conf/nginx.conf

ExecStart=/opt/nginx/sbin/nginx -c /opt/nginx/conf/nginx.conf

ExecReload=/opt/nginx/sbin/nginx -s reload

ExecStop=/opt/nginx/sbin/nginx -s stop

Restart=on-failure

RestartSec=42s

[Install]

WantedBy=multi-user.target

EOF

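# enable at boot and start now

systemctl enable nginx --now

# check that the service is running

ps -ef | grep nginx

nginx configuration file (run systemctl restart nginx after writing it so the running instance picks it up)

cat >/opt/nginx/conf/nginx.conf<<'EOF'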

user nginx nginx;

worker_processes auto;

events {

worker_connections 20240;

use epoll;

}

error_log /var/log/nginx_error.log info;

stream {

upstream kube-servers {

hash $remote_addr consistent;

server k8s-master-01:6443 weight=5 max_fails=1 fail_timeout=3s; # an IP address can be used here instead

server k8s-master-02:6443 weight=5 max_fails=1 fail_timeout=3s;

server k8s-master-03:6443 weight=5 max_fails=1 fail_timeout=3s;

}

server {

listen 8443 reuseport;

proxy_connect_timeout 3s;

proxy_timeout 3000s; # raised timeout for long-lived connections

proxy_pass kube-servers;

}

}

EOF

# distribute to the other master nodes

for i in k8s-02 k8s-03; do

scp /opt/nginx/conf/nginx.conf root@$i:/opt/nginx/conf/

ssh root@$i systemctl restart nginx

done

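Configure Keepalived

The HA setup needs a VIP through which the cluster reaches the API server

yum install -y keepalived

Edit the configuration file; on each node adjust:

- router_id: the node's IP

- mcast_src_ip: the node's IP

- virtual_ipaddress: the VIP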

cat > /etc/keepalived/keepalived.conf <<EOF

! Configuration File for keepalived

global_defs {

router_id 192.168.1.171 # node identifier; set to each master's own IP or hostname

}

vrrp_script chk_nginx {

script "/etc/keepalived/check_port.sh 8443"

interval 2

weight -20

}

vrrp_instance VI_1 {

state MASTER

interface eth0

virtual_router_id 251

priority 100

advert_int 1

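mcast_src_ip 192.168.1.171 # IP of this node's interface (master1 here)

nopreempt # note: keepalived only honours nopreempt when the initial state is BACKUP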

authentication {

auth_type PASS

auth_pass 11111111

}

track_script {

chk_nginx

}

virtual_ipaddress {

192.168.1.111 # VIP

}

}

EOF
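Since only router_id, mcast_src_ip and (typically) priority differ between the masters, the per-node files can be derived from the one written above instead of being edited by hand. The sketch below assumes exactly that layout; `render_keepalived` is a hypothetical helper, and the priorities 90 and 80 in the usage note are illustrative values, not taken from the original setup.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads a keepalived.conf on stdin and rewrites the
# node-specific values. $1 = this node's IP, $2 = VRRP priority.
# The patterns are anchored at the start of the line (after optional
# indentation), so "virtual_router_id" is left untouched. Trailing
# comments on the rewritten lines are dropped.
render_keepalived() {
    sed -e "s/^\([[:space:]]*router_id\) .*/\1 $1/" \
        -e "s/^\([[:space:]]*mcast_src_ip\) .*/\1 $1/" \
        -e "s/^\([[:space:]]*priority\) .*/\1 $2/"
}
```

For example, `render_keepalived 192.168.1.172 90 < /etc/keepalived/keepalived.conf | ssh root@k8s-02 'cat > /etc/keepalived/keepalived.conf'`, and likewise with 192.168.1.173 and 80 for k8s-03.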

Health check script (save it as /etc/keepalived/check_port.sh and make it executable with chmod +x)

vim /etc/keepalived/check_port.sh

CHK_PORT=$1

if [ -n "$CHK_PORT" ];then

PORT_PROCESS=`ss -lnt | grep ":$CHK_PORT " | wc -l`

if [ $PORT_PROCESS -eq 0 ];then

echo "Port $CHK_PORT Is Not Used,End."

exit 1

fi

else

echo "Check Port Cant Be Empty!"

fi

Start keepalived

systemctl enable --now keepalived

Test that the VIP is reachable

ping apiserver.sundayhk.com

kubeadm installation and configuration

Master node steps

Kubernetes Aliyun repository

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg

http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

Install kubeadm, kubelet, and kubectl

yum install -y kubeadm-1.23.5 kubelet-1.23.5 kubectl-1.23.5 --disableexcludes=kubernetes

systemctl enable kubelet --now

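- Although kubeadm is used here to manage the etcd members, note that kubeadm does not plan to support certificate rotation or upgrades for such nodes.

- The long-term plan is to manage them with etcdadm.

Create the kubeadm configuration file

# Only needed on master1; the other masters and the workers join with kubeadm join and do not use this file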

kubeadm config print init-defaults > ~/kubeadm-init.yaml

cat kubeadm-init.yaml

apiVersion: kubeadm.k8s.io/v1beta3
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.1.171 # master1
  bindPort: 6443
nodeRegistration:
  criSocket: unix:///var/run/containerd/containerd.sock
  imagePullPolicy: IfNotPresent
  name: k8s-01
  taints: null
---
apiServer:
  timeoutForControlPlane: 4m0s
  extraArgs:
    etcd-servers: https://192.168.1.171:2379,https://192.168.1.172:2379,https://192.168.1.173:2379
apiVersion: kubeadm.k8s.io/v1beta3
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns: {}
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.aliyuncs.com/google_containers # Aliyun mirror
kind: ClusterConfiguration
kubernetesVersion: 1.23.5
controlPlaneEndpoint: apiserver.xwx.cn:8443 # HA address or domain name; it must resolve to the VIP on every node
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
  podSubnet: 10.244.0.0/16
scheduler: {}
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs # kube-proxy mode
---
apiVersion: kubelet.config.k8s.io/v1beta1
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 0s
    enabled: true
  x509:
    clientCAFile: /etc/kubernetes/pki/ca.crt
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 0s
    cacheUnauthorizedTTL: 0s
clusterDNS:
- 10.96.0.10
clusterDomain: cluster.local
cpuManagerReconcilePeriod: 0s
evictionPressureTransitionPeriod: 0s
fileCheckFrequency: 0s
healthzBindAddress: 127.0.0.1
healthzPort: 10248
httpCheckFrequency: 0s
imageMinimumGCAge: 0s
kind: KubeletConfiguration
cgroupDriver: systemd # cgroup driver, matching containerd's SystemdCgroup setting
logging: {}
memorySwap: {}
nodeStatusReportFrequency: 0s
nodeStatusUpdateFrequency: 0s
rotateCertificates: true
runtimeRequestTimeout: 0s
shutdownGracePeriod: 0s
shutdownGracePeriodCriticalPods: 0s
staticPodPath: /etc/kubernetes/manifests
streamingConnectionIdleTimeout: 0s
syncFrequency: 0s
volumeStatsAggPeriod: 0s

Check the configuration file for errors

kubeadm init --config kubeadm-init.yaml --dry-run

Pull the images in advance

kubeadm config images list --config kubeadm-init.yaml

kubeadm config images pull --image-repository registry.aliyuncs.com/google_containers --kubernetes-version v1.23.5

Initialize

kubeadm init --config kubeadm-init.yaml --upload-certs

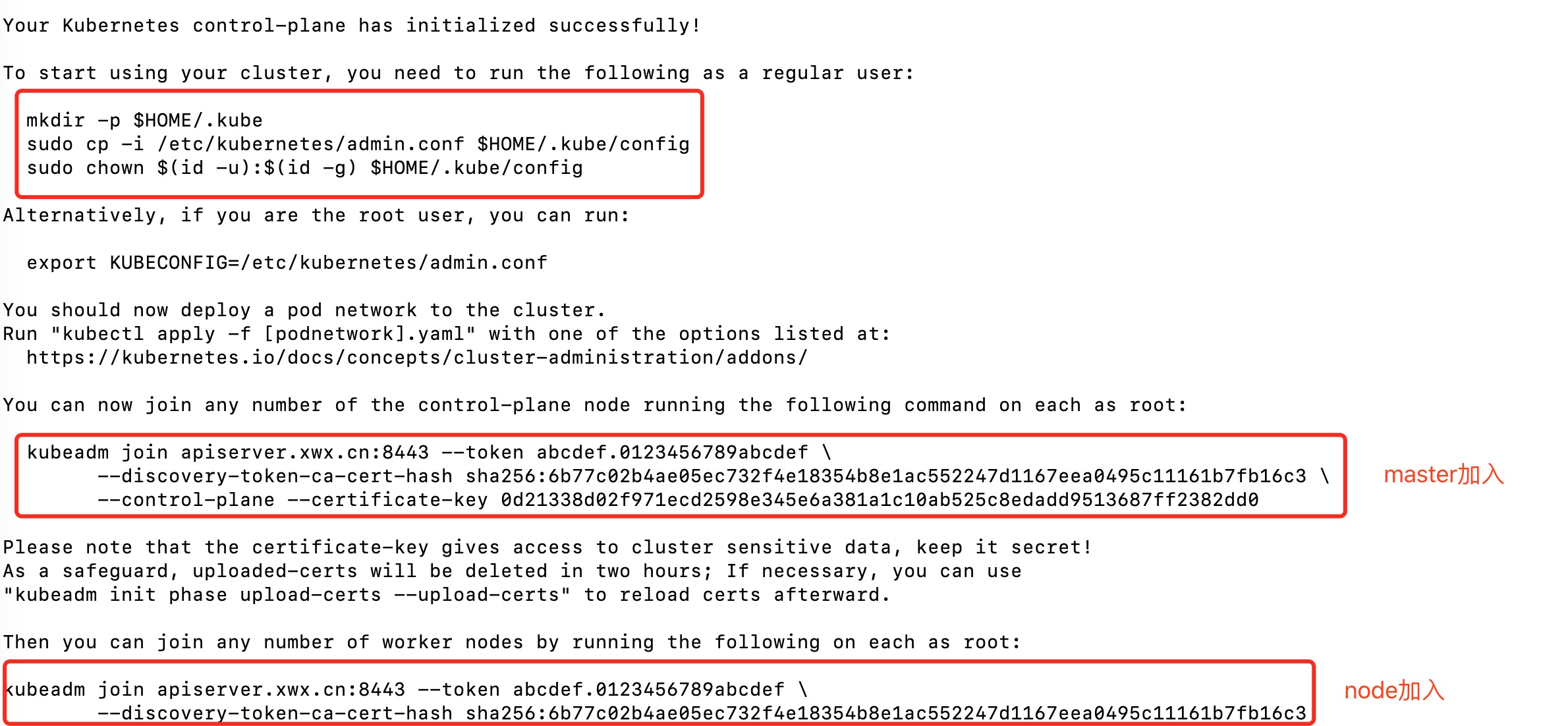

Initialization complete

Copy the kubeconfig for kubectl; the default path is ~/.kube/config

mkdir -p $HOME/.kube

cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

chown $(id -u):$(id -g) $HOME/.kube/config

# the configuration used for initialization is stored in a ConfigMap

kubectl -n kube-system get cm kubeadm-config -o yaml

kubectl now shows the node

[root@k8s-01 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-01 Ready control-plane,master 10m v1.23.5

Join the other masters

kubeadm join apiserver.xwx.cn:8443 --token abcdef.0123456789abcdef --discovery-token-ca-cert-hash \

sha256:6b77c02b4ae05ec732f4e18354b8e1ac552247d1167eea0495c11161b7fb16c3 \

--control-plane --certificate-key 0d21338d02f971ecd2598e345e6a381a1c10ab525c8edadd9513687ff2382dd0

Set up the kubectl config file

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

[root@k8s-03 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-01 Ready control-plane,master 13m v1.23.5

k8s-02 Ready control-plane,master 124s v1.23.5

k8s-03 Ready control-plane,master 50s v1.23.5

Worker node steps

Kubernetes Aliyun repository

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg

http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

Install kubeadm, kubelet, and kubectl

yum install -y kubeadm-1.23.5 kubelet-1.23.5 kubectl-1.23.5 --disableexcludes=kubernetes

systemctl enable kubelet --now

Join the worker nodes

kubeadm join apiserver.xwx.cn:8443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:6b77c02b4ae05ec732f4e18354b8e1ac552247d1167eea0495c11161b7fb16c3

To add more worker nodes later, generate a new token on a master node

[root@k8s-01 ~]# kubeadm token create --print-join-command

kubeadm join apiserver.xwx.cn:8443 --token cboomw.45wo8gcq7rjlvrgg --discovery-token-ca-cert-hash sha256:6b77c02b4ae05ec732f4e18354b8e1ac552247d1167eea0495c11161b7fb16c3

View the cluster (output of kubectl get node -o wide)

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

k8s-01 Ready control-plane,master 14h v1.23.5 192.168.1.171 <none> CentOS Linux 7 (Core) 5.19.1-1.el7.elrepo.x86_64 containerd://1.6.4

k8s-02 Ready control-plane,master 14h v1.23.5 192.168.1.172 <none> CentOS Linux 7 (Core) 5.19.1-1.el7.elrepo.x86_64 containerd://1.6.4

k8s-03 Ready control-plane,master 14h v1.23.5 192.168.1.173 <none> CentOS Linux 7 (Core) 5.19.1-1.el7.elrepo.x86_64 containerd://1.6.4

k8s-04 Ready <none> 13h v1.23.5 192.168.1.174 <none> CentOS Linux 7 (Core) 5.19.1-1.el7.elrepo.x86_64 containerd://1.6.4

k8s-05 Ready <none> 13h v1.23.5 192.168.1.175 <none> CentOS Linux 7 (Core) 5.19.1-1.el7.elrepo.x86_64 containerd://1.6.4

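Network configuration

At this point the cluster is not yet usable because no network plugin is installed. Any of the plugins listed in the documentation can be chosen; flannel is installed here. https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/create-cluster-kubeadm/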

cat >>kube-flannel.yaml<<EOF

---
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: psp.flannel.unprivileged
  annotations:
    seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default
    seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default
    apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default
    apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default
spec:
  privileged: false
  volumes:
  - configMap
  - secret
  - emptyDir
  - hostPath
  allowedHostPaths:
  - pathPrefix: "/etc/cni/net.d"
  - pathPrefix: "/etc/kube-flannel"
  - pathPrefix: "/run/flannel"
  readOnlyRootFilesystem: false
  # Users and groups
  runAsUser:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
  # Privilege Escalation
  allowPrivilegeEscalation: false
  defaultAllowPrivilegeEscalation: false
  # Capabilities
  allowedCapabilities: ['NET_ADMIN', 'NET_RAW']
  defaultAddCapabilities: []
  requiredDropCapabilities: []
  # Host namespaces
  hostPID: false
  hostIPC: false
  hostNetwork: true
  hostPorts:
  - min: 0
    max: 65535
  # SELinux
  seLinux:
    # SELinux is unused in CaaSP
    rule: 'RunAsAny'
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
rules:
- apiGroups: ['extensions']
  resources: ['podsecuritypolicies']
  verbs: ['use']
  resourceNames: ['psp.flannel.unprivileged']
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes/status
  verbs:
  - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-system
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-system
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
      hostNetwork: true
      priorityClassName: system-node-critical
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni-plugin
        #image: flannelcni/flannel-cni-plugin:v1.0.1 for ppc64le and mips64le (dockerhub limitations may apply)
        image: rancher/mirrored-flannelcni-flannel-cni-plugin:v1.0.1
        command:
        - cp
        args:
        - -f
        - /flannel
        - /opt/cni/bin/flannel
        volumeMounts:
        - name: cni-plugin
          mountPath: /opt/cni/bin
      - name: install-cni
        #image: flannelcni/flannel:v0.17.0 for ppc64le and mips64le (dockerhub limitations may apply)
        image: rancher/mirrored-flannelcni-flannel:v0.17.0
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        #image: flannelcni/flannel:v0.17.0 for ppc64le and mips64le (dockerhub limitations may apply)
        image: rancher/mirrored-flannelcni-flannel:v0.17.0
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        - --iface=eth0
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN", "NET_RAW"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: EVENT_QUEUE_DEPTH
          value: "5000"
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
        - name: xtables-lock
          mountPath: /run/xtables.lock
      volumes:
      - name: run
        hostPath:
          path: /run/flannel
      - name: cni-plugin
        hostPath:
          path: /opt/cni/bin
      - name: cni
        hostPath:
          path: /etc/cni/net.d
      - name: flannel-cfg
        configMap:
          name: kube-flannel-cfg
      - name: xtables-lock
        hostPath:
          path: /run/xtables.lock
          type: FileOrCreate

EOF

- The podSubnet set in the kubeadm configuration file and the Network in this flannel manifest must be the same range
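A mismatch between the two ranges is easy to overlook, so a quick comparison before applying the manifest can help. The following is a sketch using plain sed rather than a YAML parser; `pod_subnet`, `flannel_network` and `subnets_match` are hypothetical helper names, and the patterns assume the key layout shown in the two files of this guide.

```shell
#!/usr/bin/env bash
# Hypothetical helpers; they rely on simple line patterns, not YAML parsing.

# Print the podSubnet value from a kubeadm configuration file.
pod_subnet() {
    sed -n 's/^[[:space:]]*podSubnet:[[:space:]]*\([^[:space:]#]*\).*/\1/p' "$1" | head -n 1
}

# Print the "Network" value from the net-conf.json section of a flannel manifest.
flannel_network() {
    sed -n 's/.*"Network":[[:space:]]*"\([^"]*\)".*/\1/p' "$1" | head -n 1
}

# Succeed only if both values were found and are identical.
subnets_match() {
    local a b
    a=$(pod_subnet "$1")
    b=$(flannel_network "$2")
    [ -n "$a" ] && [ "$a" = "$b" ]
}
```

For instance: `subnets_match kubeadm-init.yaml kube-flannel.yaml || echo "podSubnet and flannel Network differ"`.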

[root@k8s-01 ~]# kubectl apply -f kube-flannel.yaml

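CNI plugin conflict: by default containerd ships its own CNI configuration, but Flannel is now installed and its CNI configuration is the one that should be used, so the containerd configuration has to be disabled or the two will conflict. When the directory contains several CNI configuration files, kubelet uses the first one in lexicographic order of file name, which is why the containerd-net configuration was being selected before.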

mv /etc/cni/net.d/10-containerd-net.conflist /etc/cni/net.d/10-containerd-net.conflist.bak

ifconfig cni0 down && ip link delete cni0

systemctl daemon-reload

systemctl restart containerd kubelet
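The "first file in lexicographic order wins" rule can be checked directly on a node. The sketch below approximates that selection; `active_cni_config` is a hypothetical helper, and the set of extensions it considers (.conf, .conflist, .json) is an assumption about what the runtime loads.

```shell
#!/usr/bin/env bash
# Hypothetical helper: prints the CNI configuration file that sorts first
# in the given directory (default /etc/cni/net.d), approximating the
# lexicographic selection described above. Files with other extensions,
# such as *.bak, are ignored.
active_cni_config() {
    local dir="${1:-/etc/cni/net.d}"
    ls "$dir" 2>/dev/null | grep -E '\.(conf|conflist|json)$' | LC_ALL=C sort | head -n 1
}
```

After the rename above, `active_cni_config` would be expected to print 10-flannel.conflist on every node.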

Check the flannel pods

[root@k8s-01 ~]# kubectl get pod --all-namespaces -o wide | grep flannel

kube-system kube-flannel-ds-4hbdw 1/1 Running 0 13h 192.168.1.175 k8s-05 <none> <none>

kube-system kube-flannel-ds-9dzrj 1/1 Running 0 13h 192.168.1.172 k8s-02 <none> <none>

kube-system kube-flannel-ds-l948s 1/1 Running 1 (7m40s ago) 13h 192.168.1.174 k8s-04 <none> <none>

kube-system kube-flannel-ds-pjtx9 1/1 Running 0 13h 192.168.1.173 k8s-03 <none> <none>

kube-system kube-flannel-ds-qbh6r 1/1 Running 0 13h 192.168.1.171 k8s-01 <none> <none>

metrics-server

Copy the certificate to all worker nodes

scp /etc/kubernetes/pki/front-proxy-ca.crt k8s-04:/etc/kubernetes/pki/front-proxy-ca.crt

scp /etc/kubernetes/pki/front-proxy-ca.crt k8s-05:/etc/kubernetes/pki/front-proxy-ca.crt

https://github.com/kubernetes-sigs/metrics-server/releases

# sed -i 's/k8s.gcr.io\/metrics-server/registry.aliyuncs.com\/google_containers/' components.yaml

cat components.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
    rbac.authorization.k8s.io/aggregate-to-admin: "true"
    rbac.authorization.k8s.io/aggregate-to-edit: "true"
    rbac.authorization.k8s.io/aggregate-to-view: "true"
  name: system:aggregated-metrics-reader
rules:
- apiGroups:
  - metrics.k8s.io
  resources:
  - pods
  - nodes
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
rules:
- apiGroups:
  - ""
  resources:
  - pods
  - nodes
  - nodes/stats
  - namespaces
  - configmaps
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server-auth-reader
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: extension-apiserver-authentication-reader
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server:system:auth-delegator
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:auth-delegator
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:metrics-server
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: v1
kind: Service
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  ports:
  - name: https
    port: 443
    protocol: TCP
    targetPort: https
  selector:
    k8s-app: metrics-server
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  selector:
    matchLabels:
      k8s-app: metrics-server
  strategy:
    rollingUpdate:
      maxUnavailable: 0
  template:
    metadata:
      labels:
        k8s-app: metrics-server
    spec:
      containers:
      - args:
        - --cert-dir=/tmp
        - --secure-port=4443
        - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
        - --kubelet-use-node-status-port
        - --metric-resolution=15s
        - --kubelet-insecure-tls
        - --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt # change to front-proxy-ca.crt for kubeadm
        - --requestheader-username-headers=X-Remote-User
        - --requestheader-group-headers=X-Remote-Group
        - --requestheader-extra-headers-prefix=X-Remote-Extra-
        image: registry.aliyuncs.com/google_containers/metrics-server:v0.5.0
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /livez
            port: https
            scheme: HTTPS
          periodSeconds: 10
        name: metrics-server
        ports:
        - containerPort: 4443
          name: https
          protocol: TCP
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /readyz
            port: https
            scheme: HTTPS
          initialDelaySeconds: 20
          periodSeconds: 10
        resources:
          requests:
            cpu: 100m
            memory: 200Mi
        securityContext:
          readOnlyRootFilesystem: true
          runAsNonRoot: true
          runAsUser: 1000
        volumeMounts:
        - mountPath: /tmp
          name: tmp-dir
        - name: ca-ssl
          mountPath: /etc/kubernetes/pki
      nodeSelector:
        kubernetes.io/os: linux
      priorityClassName: system-cluster-critical
      serviceAccountName: metrics-server
      volumes:
      - emptyDir: {}
        name: tmp-dir
      - name: ca-ssl
        hostPath:
          path: /etc/kubernetes/pki
---
apiVersion: apiregistration.k8s.io/v1
kind: APIService
metadata:
  labels:
    k8s-app: metrics-server
  name: v1beta1.metrics.k8s.io
spec:
  group: metrics.k8s.io
  groupPriorityMinimum: 100
  insecureSkipTLSVerify: true
  service:
    name: metrics-server
    namespace: kube-system
  version: v1beta1
  versionPriority: 100

Verify the cluster

Test DNS

cat >> busybox_test.yaml <<EOF

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
spec:
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - image: nginx:alpine
        name: nginx
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx
spec:
  selector:
    app: nginx
  type: NodePort
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
    nodePort: 30001
---
apiVersion: v1
kind: Pod
metadata:
  name: busybox
  namespace: default
spec:
  containers:
  - name: busybox
    image: busybox:1.28.3 # note: nslookup fails to resolve in versions newer than 1.28.3
    command:
    - sleep
    - "3600"
    imagePullPolicy: IfNotPresent
  restartPolicy: Always

EOF

[root@k8s-01 ~]# kubectl apply -f busybox_test.yaml

[root@k8s-01 ~]# kubectl get pod,svc

NAME READY STATUS RESTARTS AGE

pod/busybox 1/1 Running 14 (15m ago) 13h

pod/nginx-9fbb7d78-xc2wz 1/1 Running 0 13h

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 15h

service/nginx NodePort 10.105.222.103 <none> 80:30001/TCP 13h

[root@k8s-01 ~]# kubectl get pod --all-namespaces -o wide | grep nginx

default nginx-9fbb7d78-xc2wz 1/1 Running 0 13h 10.244.3.4 k8s-05 <none> <none>

[root@k8s-01 ~]# kubectl exec -ti busybox -- nslookup kubernetes

Server: 10.96.0.10

Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

Name: kubernetes

Address 1: 10.96.0.1 kubernetes.default.svc.cluster.local

Test that the nginx Service and the Pod network are reachable from every node

for i in k8s-01 k8s-02 k8s-03 k8s-04 k8s-05; do

echo $i

ssh root@$i curl -s 10.105.222.103 | grep "using nginx" #nginx svc ip

ssh root@$i curl -s 10.244.3.4 | grep "using nginx" #pod ip

done

Access the NodePort on the host

[root@k8s-01 ~]# curl -I apiserver.sundayhk.com:30001

HTTP/1.1 200 OK

Server: nginx/1.23.1

Date: Fri, 14 Oct 2022 07:57:11 GMT

Content-Type: text/html

Content-Length: 615

Last-Modified: Tue, 19 Jul 2022 15:23:19 GMT

Connection: keep-alive

ETag: "62d6cc67-267"

Accept-Ranges: bytes

Expired join token

Generate a worker join command

# worker join

[root@k8s-01 ~]# kubeadm token create --print-join-command

kubeadm join apiserver.xwx.cn:8443 --token rx8wzz.9ih17oy88v6dwwty --discovery-token-ca-cert-hash sha256:6b77c02b4ae05ec732f4e18354b8e1ac552247d1167eea0495c11161b7fb16c3

Generate a master join command

# get a join token

[root@k8s-01 ~]# kubeadm token create --print-join-command

kubeadm join apiserver.xwx.cn:8443 --token rx8wzz.9ih17oy88v6dwwty --discovery-token-ca-cert-hash sha256:6b77c02b4ae05ec732f4e18354b8e1ac552247d1167eea0495c11161b7fb16c3

# re-upload the certificates and get the certificate key

[root@k8s-01 ~]# kubeadm init phase upload-certs --upload-certs

I1015 20:29:10.116058 60221 version.go:255] remote version is much newer: v1.25.3; falling back to: stable-1.23

[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[upload-certs] Using certificate key:

6581d450fedceb490cfbeb263b9a019253033b7ebaadd938156a1b2cf8b43479

# combine the two: append --control-plane and --certificate-key to the join command

[root@k8s-01 ~]# kubeadm join apiserver.xwx.cn:8443 --token rx8wzz.9ih17oy88v6dwwty --discovery-token-ca-cert-hash \

sha256:6b77c02b4ae05ec732f4e18354b8e1ac552247d1167eea0495c11161b7fb16c3 \

--control-plane --certificate-key 6581d450fedceb490cfbeb263b9a019253033b7ebaadd938156a1b2cf8b43479
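The manual edit above, appending `--control-plane` and `--certificate-key` to the worker join command, can be scripted. This is a sketch only: `control_plane_join` is a hypothetical helper, and taking the last line of the `upload-certs` output as the key is an assumption based on the output shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: builds a control-plane join command.
# $1 = worker join command (as printed by kubeadm token create --print-join-command)
# $2 = certificate key (as printed by kubeadm init phase upload-certs --upload-certs)
control_plane_join() {
    printf '%s --control-plane --certificate-key %s\n' "$1" "$2"
}

# Possible use on an existing master (not executed here):
#   join_cmd=$(kubeadm token create --print-join-command)
#   cert_key=$(kubeadm init phase upload-certs --upload-certs | tail -n 1)
#   control_plane_join "$join_cmd" "$cert_key"
```

The printed command is then run on the new master node, not on the node that generated it.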

Install shell completion

yum install bash-completion -y

source /usr/share/bash-completion/bash_completion

source <(kubectl completion bash)

echo "source <(kubectl completion bash)" >> ~/.bashrc

Troubleshooting

[preflight] Running pre-flight checks

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR CRI]: container runtime is not running: output: time="2022-10-13T23:42:33+08:00" level=fatal msg="getting status of runtime: rpc error: code = Unimplemented desc = unknown service runtime.v1alpha2.RuntimeService"

Fix:

/etc/containerd/config.toml

Change snapshotter = "overlayfs" to snapshotter = "native"

References

https://i4t.com/5488.html

https://blog.csdn.net/qq_36002737/article/details/123678418

https://blog.csdn.net/hans99812345/article/details/123977441