Deployment

git clone https://github.com/prometheus-operator/kube-prometheus.git

cd kube-prometheus

git checkout v0.11.0 # switch to the release compatible with your Kubernetes version

First create the required namespace and CRDs, then wait for them to become available before creating the remaining resources.

kubectl apply --server-side -f manifests/setup

kubectl wait \
  --for condition=Established \
  --all CustomResourceDefinition \
  --namespace=monitoring

kubectl apply -f manifests/

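Note: the CRDs in manifests/setup must be created with kubectl create or with kubectl apply --server-side. A plain client-side kubectl apply fails with "Too long: must have at most 262144 bytes".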
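The 262144-byte figure in that error is the Kubernetes limit on the total size of an object's annotations. Client-side kubectl apply can exceed it because it stores the whole manifest in the kubectl.kubernetes.io/last-applied-configuration annotation. As a rough check, the sketch below lists manifests whose file size is already above that limit. The helper name oversized_manifests is hypothetical, and file size is only a proxy for the size of the stored annotation.

```shell
# Hypothetical helper: list manifests larger than the annotation size limit.
ANNOTATION_LIMIT=262144

oversized_manifests() {
  # $1: directory to scan, for example manifests/setup
  for f in "$1"/*.yaml; do
    [ -f "$f" ] || continue
    # File size in bytes; tr strips the padding some wc implementations add.
    size=$(wc -c < "$f" | tr -d ' ')
    if [ "$size" -gt "$ANNOTATION_LIMIT" ]; then
      printf '%s %s\n' "$size" "$f"
    fi
  done
}
```

Running `oversized_manifests manifests/setup` prints one line per oversized file: the size in bytes followed by the path.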

kubectl get servicemonitors --all-namespaces

kubectl get pod -n monitoring

kubectl get svc -n monitoring

Accessing the dashboards

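Release 0.11 adds NetworkPolicy resources that by default only allow access from pods within kube-prometheus. These rules must be modified or deleted before the components can be reached through a Service, NodePort, or Ingress.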

Modify the NetworkPolicy rules

kubectl get networkPolicy -n monitoring

cd /root/kube-prometheus/manifests

vim grafana-networkPolicy.yaml

spec:
  egress:
  - {}
  ingress:
  - from:
    - podSelector:
        matchLabels:
          app.kubernetes.io/name: prometheus
    - ipBlock: # added entry
        cidr: 192.168.10.0/24 # trusted network range
    ports:
    - port: 3000
      protocol: TCP

vim prometheus-networkPolicy.yaml

spec:
  egress:
  - {}
  ingress:
  - from:
    - podSelector:
        matchLabels:
          app.kubernetes.io/name: prometheus
    - ipBlock:
        cidr: 192.168.10.0/24
    ports:
    - port: 9090
      protocol: TCP
    - port: 8080
      protocol: TCP

vim alertmanager-networkPolicy.yaml

spec:
  egress:
  - {}
  ingress:
  - from:
    - podSelector:
        matchLabels:
          app.kubernetes.io/name: prometheus
    - ipBlock:
        cidr: 192.168.10.0/24
    ports:
    - port: 9093
      protocol: TCP
    - port: 8080
      protocol: TCP
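The same ipBlock entry can also be added to the live objects instead of editing each file. The sketch below is an alternative to the file edits, not the method used in this guide: it builds a JSON Patch that appends the trusted CIDR to the first ingress rule. The function names are hypothetical, and the NetworkPolicy names (grafana, prometheus-k8s, alertmanager-main) are assumptions that should be confirmed with kubectl get networkPolicy -n monitoring.

```shell
# Hypothetical helpers: append an ipBlock source to a NetworkPolicy's first ingress rule.

ipblock_patch() {
  # $1: CIDR to trust, e.g. 192.168.10.0/24
  # "/from/-" appends to the existing "from" list of ingress rule 0.
  printf '[{"op":"add","path":"/spec/ingress/0/from/-","value":{"ipBlock":{"cidr":"%s"}}}]\n' "$1"
}

allow_cidr() {
  # $1: NetworkPolicy name (assumed), $2: CIDR to trust
  kubectl patch networkpolicy "$1" -n monitoring --type=json -p "$(ipblock_patch "$2")"
}
```

For example, `allow_cidr grafana 192.168.10.0/24`. A patch applied this way is overwritten the next time the manifests are re-applied, so the file edits remain the durable option.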

Option 1: switch the Services to NodePort

kubectl patch svc grafana -p '{ "spec":{"type": "NodePort"} }' -n monitoring

kubectl patch svc alertmanager-main -p '{ "spec":{"type": "NodePort"} }' -n monitoring

kubectl patch svc prometheus-k8s -p '{ "spec":{"type": "NodePort"} }' -n monitoring
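After patching, the cluster allocates a node port for each Service. A minimal sketch for looking the ports up and printing the resulting URLs follows. The function names are hypothetical, and it assumes the first port listed on each Service is the web UI port.

```shell
# Hypothetical helpers: print the NodePort URL of each monitoring UI.

node_port() {
  # $1: Service name in the monitoring namespace
  kubectl get svc "$1" -n monitoring -o jsonpath='{.spec.ports[0].nodePort}'
}

print_nodeport_urls() {
  # $1: IP address of any cluster node
  for svc in grafana prometheus-k8s alertmanager-main; do
    printf '%s http://%s:%s\n' "$svc" "$1" "$(node_port "$svc")"
  done
}
```

Call it as `print_nodeport_urls <node-ip>`. The URLs are only reachable from networks that the NetworkPolicy rules allow.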

Option 2: configure an Ingress

cat > prometheus-ingress.yaml << EOF
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  namespace: monitoring
  name: prometheus-ingress
spec:
  ingressClassName: nginx
  rules:
  - host: grafana.example.com
    http:
      paths:
      - backend:
          service:
            name: grafana
            port:
              number: 3000
        path: /
        pathType: Prefix
  - host: prometheus.example.com
    http:
      paths:
      - backend:
          service:
            name: prometheus-k8s
            port:
              number: 9090
        path: /
        pathType: Prefix
  - host: alertmanager.example.com
    http:
      paths:
      - backend:
          service:
            name: alertmanager-main
            port:
              number: 9093
        path: /
        pathType: Prefix
EOF

kubectl apply -f prometheus-ingress.yaml
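If the three example.com hostnames are not in DNS, they can be mapped locally to the ingress controller's address. The sketch below generates the /etc/hosts lines. hosts_entries is a hypothetical helper, and the IP address passed in is whatever address your ingress controller is reachable on.

```shell
# Hypothetical helper: emit /etc/hosts lines for the three Ingress hostnames.

hosts_entries() {
  # $1: IP address of the ingress controller
  for h in grafana prometheus alertmanager; do
    printf '%s %s.example.com\n' "$1" "$h"
  done
}
```

The output can be reviewed first and then appended, for example with `hosts_entries <ingress-ip> | sudo tee -a /etc/hosts`.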

Open the dashboards

Prometheus

Prometheus: http://prometheus.example.com

[root@master1 manifests]# kubectl get pod -n monitoring | grep prometheus-k8s

prometheus-k8s-0 2/2 Running 0 128m

prometheus-k8s-1 2/2 Running 0 128m

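There are two Prometheus instances, but in practice requests are always routed to the same backend instance. The Service is created with sessionAffinity: ClientIP, which pins each client IP to one pod, so there is no need to worry about requests landing on different replicas.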
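One way to confirm this setting on a running cluster is to read the field back from the Service. A small sketch, with session_affinity as a hypothetical helper name:

```shell
# Hypothetical helper: print the sessionAffinity mode of a Service.

session_affinity() {
  # $1: Service name in the monitoring namespace
  kubectl get svc "$1" -n monitoring -o jsonpath='{.spec.sessionAffinity}'
}
```

`session_affinity prometheus-k8s` should print ClientIP if the Service is configured as described here.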

AlertManager

AlertManager: http://alertmanager.example.com

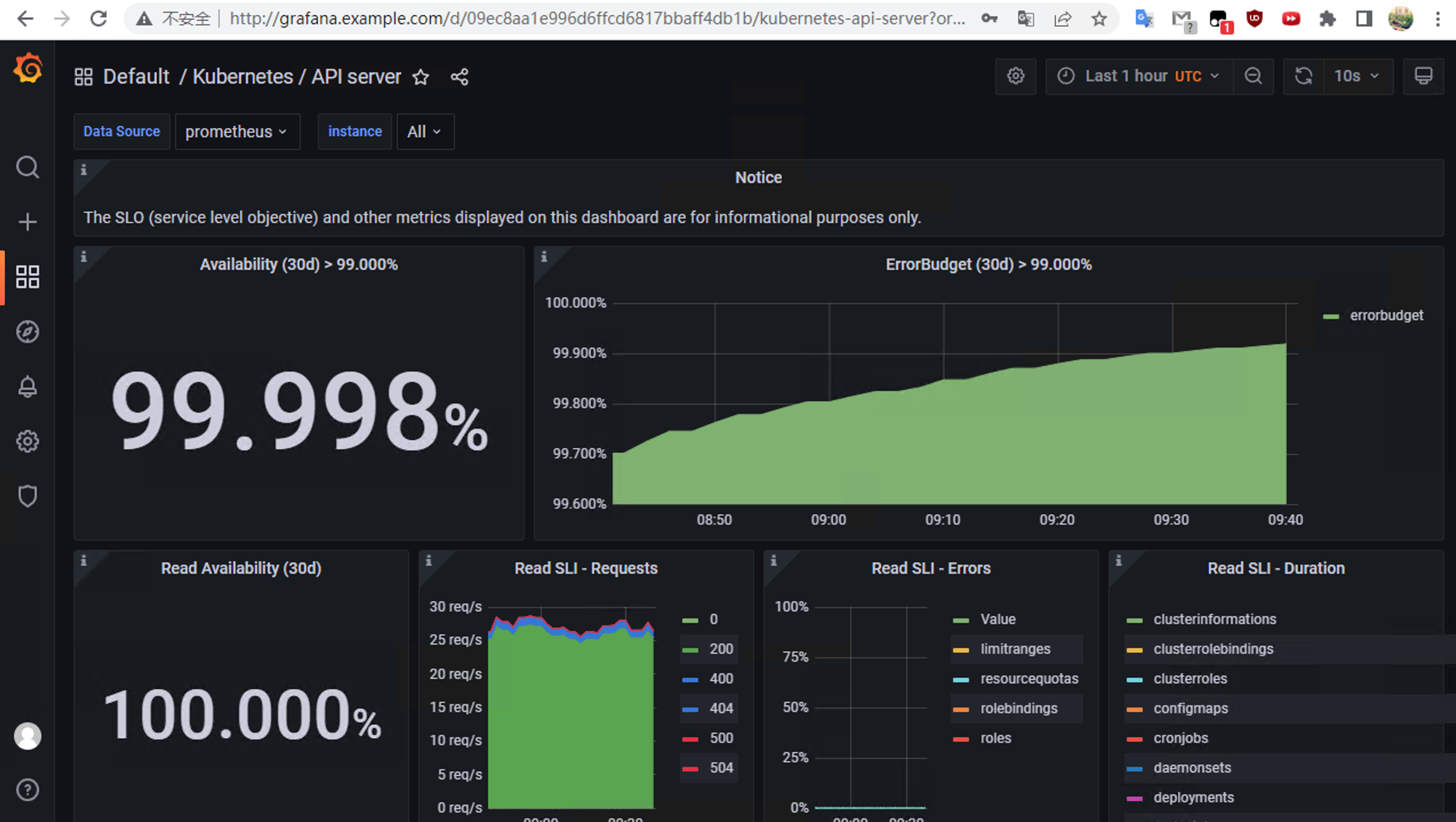

Grafana

Grafana: http://grafana.example.com (the username and password are both admin)

$ kubectl exec -it $(kubectl get pod -n monitoring -l app.kubernetes.io/name=grafana \
    -o jsonpath='{.items[*].metadata.name}') -n monitoring -- sh

/usr/share/grafana $ grafana-cli plugins install grafana-kubernetes-app

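The Grafana dashboards shipped with kube-prometheus default to the UTC time zone, which is 8 hours behind Beijing time and inconvenient for day-to-day monitoring. It can be changed as follows: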

cd /root/kube-prometheus/manifests

sed -i 's/UTC/UTC+8/g' grafana-dashboardDefinitions.yaml

sed -i 's/utc/utc+8/g' grafana-dashboardDefinitions.yaml

kubectl apply -f grafana-dashboardDefinitions.yaml

Alertmanager alerting configuration

Alertmanager email alerts

This is a trimmed-down version. For the full configuration, see kube-prometheus/manifests/alertmanager-secret.yaml.

cat << EOF > alertmanager-secret.yaml
apiVersion: v1
kind: Secret
metadata:
  labels:
    app.kubernetes.io/component: alert-router
    app.kubernetes.io/instance: main
    app.kubernetes.io/name: alertmanager
    app.kubernetes.io/part-of: kube-prometheus
    app.kubernetes.io/version: 0.24.0
  name: alertmanager-main
  namespace: monitoring
stringData:
  alertmanager.yaml: |-
    global:
      resolve_timeout: 5m
      smtp_smarthost: "smtp.qq.com:465"
      smtp_from: "xxx@qq.com" # sender address
      smtp_auth_username: "xxx@qq.com" # sender address
      smtp_auth_password: "xxxbnmqfesbdhj" # QQ Mail authorization code
      smtp_hello: "qq.com"
      smtp_require_tls: false
    route:
      group_by: ["alertname"]
      group_interval: 5m
      group_wait: 30s
      receiver: default-receiver
      repeat_interval: 12h
      routes:
      - receiver: "example-project"
        match_re:
          namespace: "^(example-project1|example-project2).*$" # route alerts by namespace
    receivers:
    - name: "default-receiver"
      email_configs:
      - to: "xxx@example.com" # recipient address; multiple recipients can be listed
        send_resolved: true
      - to: "xxx@example.com"
        send_resolved: true
    - name: "example-project" # must match the receiver name used in the route above
      email_configs:
      - to: "xxx@example.com" # recipient address; multiple recipients can be listed here too
        send_resolved: true
      - to: "xxx@example.com"
        send_resolved: true
type: Opaque
EOF

kubectl apply -f alertmanager-secret.yaml
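The match_re route in this configuration sends an alert to example-project when its namespace label matches the regular expression, and to default-receiver otherwise. The sketch below mimics that decision with grep -E so the pattern can be tried against sample namespaces before deploying. receiver_for_namespace is a hypothetical helper, and grep only approximates Alertmanager's regex engine, although the two should agree for this pattern.

```shell
# Hypothetical helper: mimic the match_re routing decision for a namespace label.

receiver_for_namespace() {
  # $1: value of the alert's namespace label
  if printf '%s\n' "$1" | grep -Eq '^(example-project1|example-project2).*$'; then
    echo example-project
  else
    echo default-receiver
  fi
}
```

With this pattern, `receiver_for_namespace example-project1-dev` prints example-project, while `receiver_for_namespace monitoring` prints default-receiver.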

Alertmanager DingTalk alerts

DingTalk: robot management

Copy the generated webhook URL

DingTalk Deployment

cat << EOF > dingtalk-webhook.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    run: dingtalk
  name: webhook-dingtalk
  namespace: monitoring
spec:
  replicas: 1
  selector:
    matchLabels:
      run: dingtalk
  template:
    metadata:
      labels:
        run: dingtalk
    spec:
      containers:
      - name: dingtalk
        image: timonwong/prometheus-webhook-dingtalk:v1.4.0
        imagePullPolicy: IfNotPresent
        args:
        - --ding.profile=webhook1=https://oapi.dingtalk.com/robot/send?access_token=<replace-with-your-token>
        ports:
        - containerPort: 8060
          protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  labels:
    run: dingtalk
  name: webhook-dingtalk
  namespace: monitoring
spec:
  ports:
  - port: 8060
    protocol: TCP
    targetPort: 8060
  selector:
    run: dingtalk
  sessionAffinity: None
EOF

kubectl apply -f dingtalk-webhook.yaml

This is a trimmed-down version. For the full configuration, see kube-prometheus/manifests/alertmanager-secret.yaml.

cat << EOF > alertmanager-dingtalk.yaml
apiVersion: v1
kind: Secret
metadata:
  labels:
    app.kubernetes.io/component: alert-router
    app.kubernetes.io/instance: main
    app.kubernetes.io/name: alertmanager
    app.kubernetes.io/part-of: kube-prometheus
    app.kubernetes.io/version: 0.24.0
  name: alertmanager-main
  namespace: monitoring
stringData:
  alertmanager.yaml: |-
    global:
      resolve_timeout: 5m
    route:
      group_by: ['alertname']
      group_interval: 5m
      group_wait: 30s
      receiver: "webhook"
      repeat_interval: 12h
    receivers:
    # webhook receiver for DingTalk alerts
    - name: 'webhook'
      webhook_configs:
      - url: 'http://webhook-dingtalk.monitoring.svc.cluster.local:8060/dingtalk/webhook1/send'
        send_resolved: true
type: Opaque
EOF

kubectl apply -f alertmanager-dingtalk.yaml
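Both this Secret and the email Secret earlier are named alertmanager-main, so whichever is applied last replaces the other. To check which configuration is currently stored, the Secret can be decoded. A sketch, with active_alertmanager_config as a hypothetical helper name:

```shell
# Hypothetical helper: print the Alertmanager configuration stored in the Secret.

active_alertmanager_config() {
  # The dot in the key name must be escaped inside the jsonpath expression.
  kubectl get secret alertmanager-main -n monitoring \
    -o jsonpath='{.data.alertmanager\.yaml}' | base64 -d
}
```

If the output of `active_alertmanager_config` shows webhook_configs, the DingTalk configuration is the one in effect. If it shows email_configs, the email one is.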

Verify that DingTalk receives the alert

# Start a container that will fail
kubectl run busybox --image=busybox

This rule takes 5 minutes to fire.

Grafana dashboard template ID to import: 3070
